The Smart Systems Institute (SSI) at the National University of Singapore is an interdisciplinary research institute and an experimental “playground” for human-centered AI technologies. Our vision is to enable situated AI assistance for every human to work, play, and learn. The research at SSI spans embodied AI, robotics, interaction design, and augmented/virtual reality. The institute brings together researchers from diverse backgrounds, including AI, engineering, design, and the social sciences, to collaborate and to shape the future of AI.

Director's Message

I hope SSI will be a place to experience research for researchers, students, and the general public alike. It will be a place where human intelligence and machine intelligence fuse, to create innovations for the future. It will be a place where human creativity and dignity are celebrated. This is our dream. Join us.

AdaComp Lab

Our long-term goal is to understand the fundamental computational questions that enable fluid human-robot interaction, collaboration, and ultimately co-existence. Our current research focuses on robust robot decision-making under uncertainty by integrating planning and machine learning.

Augmented Human Lab

At Augmented Human Lab, we are shifting away from adding an ‘accessibility layer’ to technology. Instead, we fundamentally rethink how systems can be designed to align with users' abilities and expectations. We call this Assistive Augmentation. We believe carefully designed Assistive Augmentations can empower people constrained by impairments to live more independently and extend their perceptual, cognitive, and creative capabilities beyond the ordinary.

CLeAR Lab

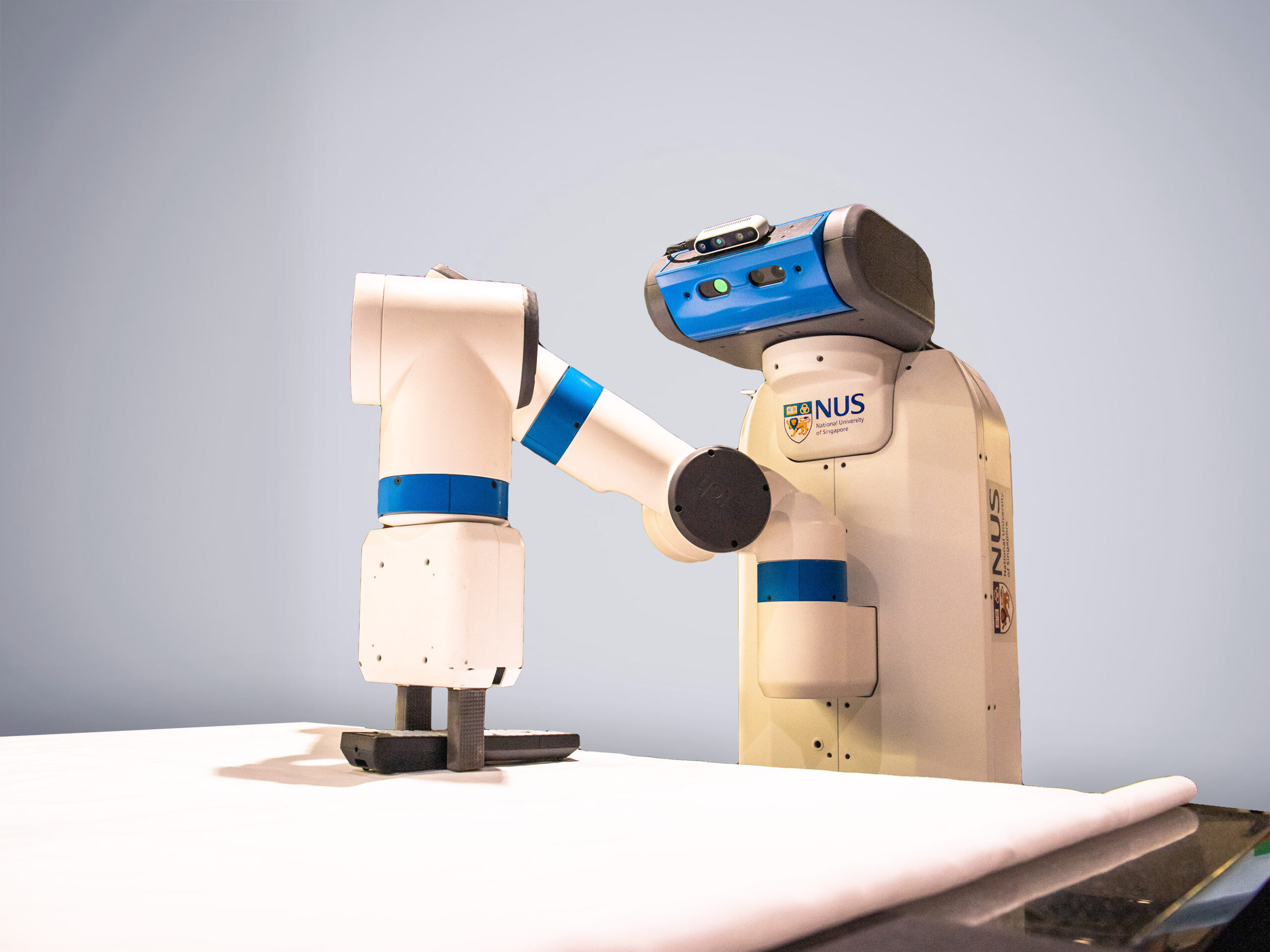

At CLeAR, we seek to improve people’s lives through intelligent robotics. We advance the science and engineering of collaborative robots that fluently interact with us to perform tasks. Our central focus has been on developing physical and social skills for robots. For the former, we’re working on new tactile perception and control methods for robots. For the latter, we’re developing better human trust models and social-projection-based communication.

Keio-NUS CUTE Center

Keio-NUS CUTE (Connective Ubiquitous Technology for Embodiments) Center is a joint collaboration between the National University of Singapore (NUS) and Keio University, Japan. The center is supported by the National Research Foundation, Prime Minister’s Office, Singapore under its International Research Centres in Singapore Funding Initiative, administered through the Infocomm Media Development Authority (IMDA) of Singapore.

Research

AiSee

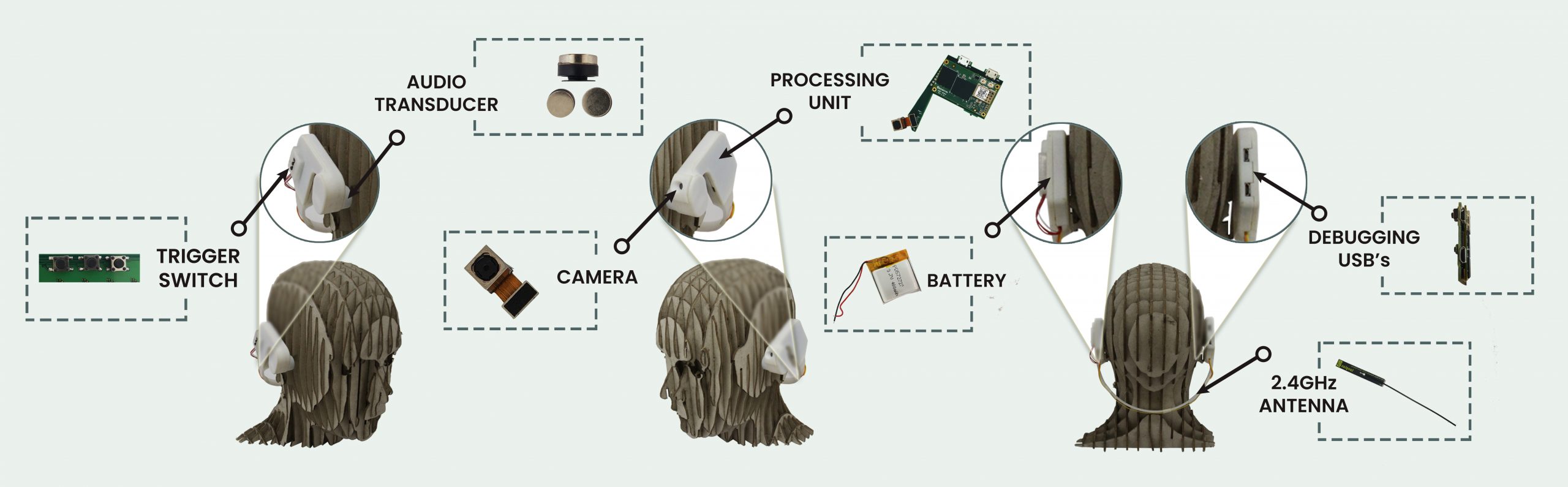

Accessing visual information in a mobile context is a major challenge for blind people, who face numerous difficulties with existing state-of-the-art technologies, including problems with accuracy, mobility, efficiency, cost, and, more importantly, social exclusion. Project AiSee aims to create an assistive device that sustainably changes how the visually impaired community can independently access information on the go. It is a discreet and reliable bone conduction headphone with a small integrated camera that uses artificial intelligence to extract and describe surrounding information when needed. The user simply needs to point and listen.

Assistive Human-Robot/AI Communication

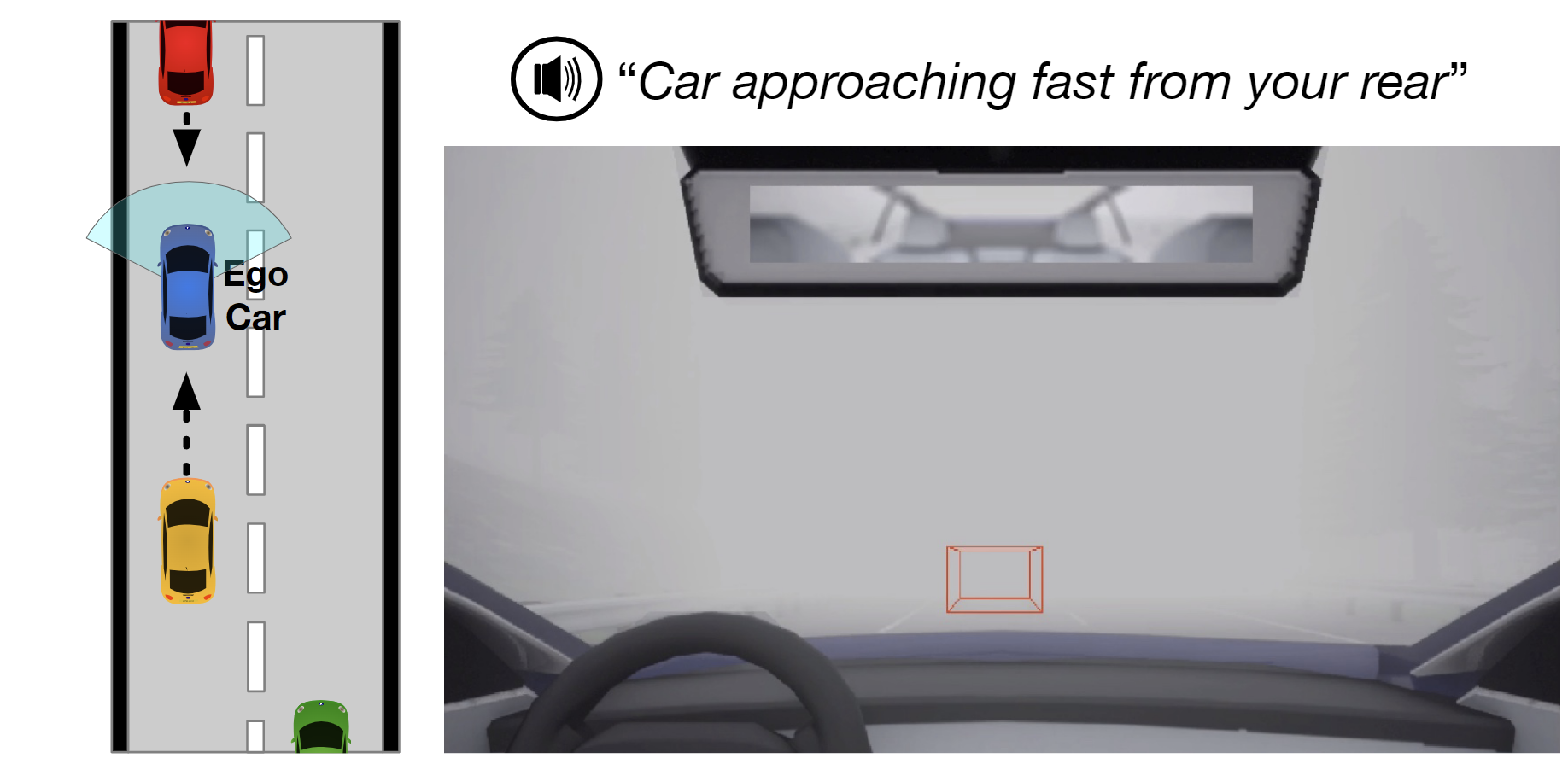

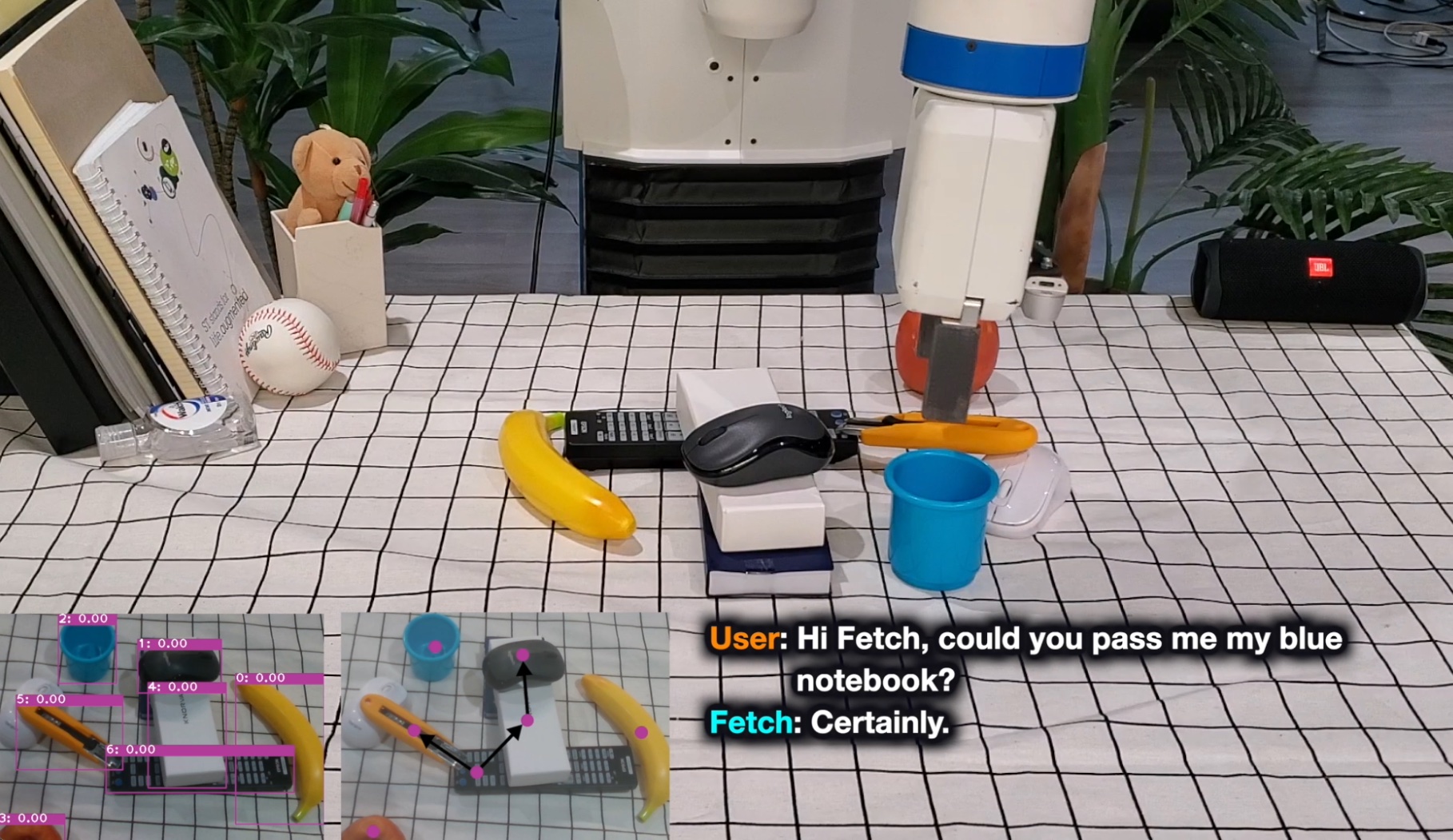

Communication is an essential skill for intelligent agents; it facilitates cooperation and coordination, and enables teamwork and joint problem-solving. However, effective communication is challenging for robots; given a multitude of information that can be relayed in different ways, how should the robot decide what, when, and how to communicate? We aim to develop artificial agents that can communicate effectively with people by leveraging human models.

Crisis Management Simulation (CMS)

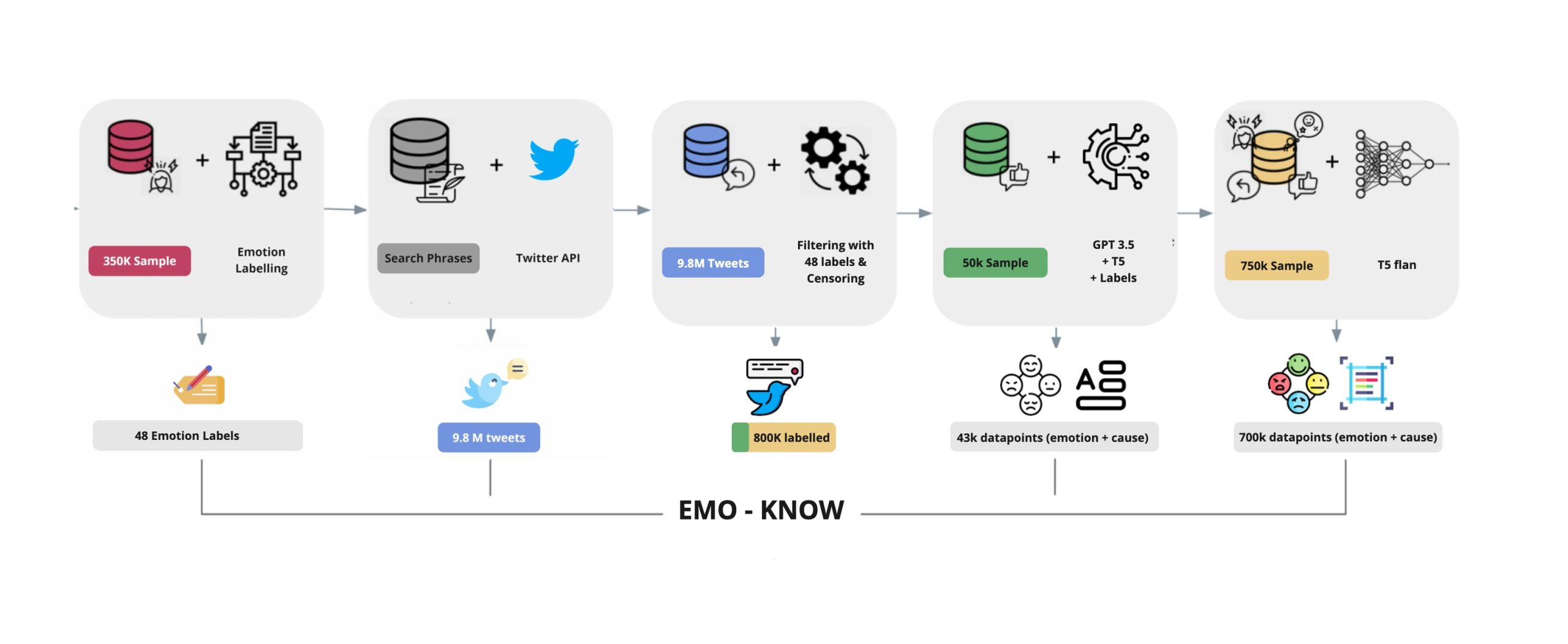

Crisis Management Simulation (CMS) is a multi-sensory XR training system that aims to educate medical trainees on the triage procedure in a fully guided virtual environment, in which they interact with a physical manikin that is represented in a precise one-to-one mapping in virtual space. The project aims to use physical embodiments to recreate the tactile feel of their digital twin in physical space, to provide an added dimension for hyper-realism in training.

EMO-KNOW

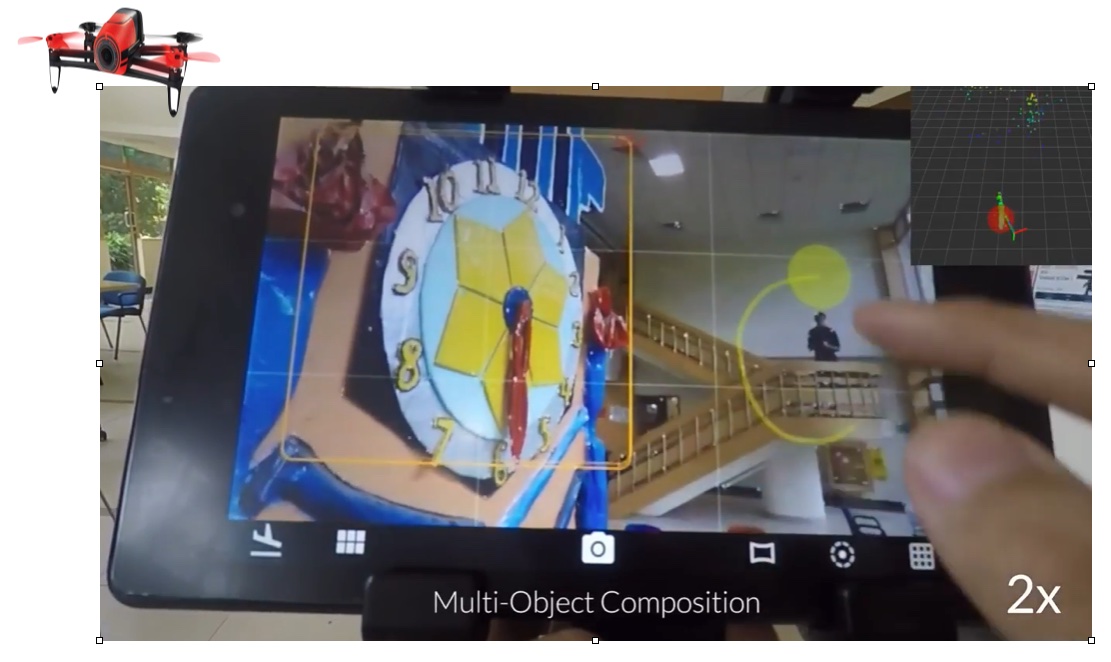

Emotion-cause analysis has attracted the attention of researchers in recent years. However, most existing datasets are limited in size and number of emotion categories. They often focus on extracting parts of the document that contain the emotion cause and fail to provide a more abstractive, generalizable root cause. To bridge this gap, we introduce EMO-KNOW: a large-scale dataset of emotion causes, derived from 9.8 million cleaned tweets over 15 years. We extract emotion labels and provide abstractive summarization of the events causing emotions. EMO-KNOW comprises over 700,000 tweets with corresponding emotion-cause pairs spanning a broad spectrum of 48 emotion classes, validated by human evaluators. EMO-KNOW will enable the design of emotion-aware systems that account for the diverse emotional responses of different people to the same event.

FlyCam

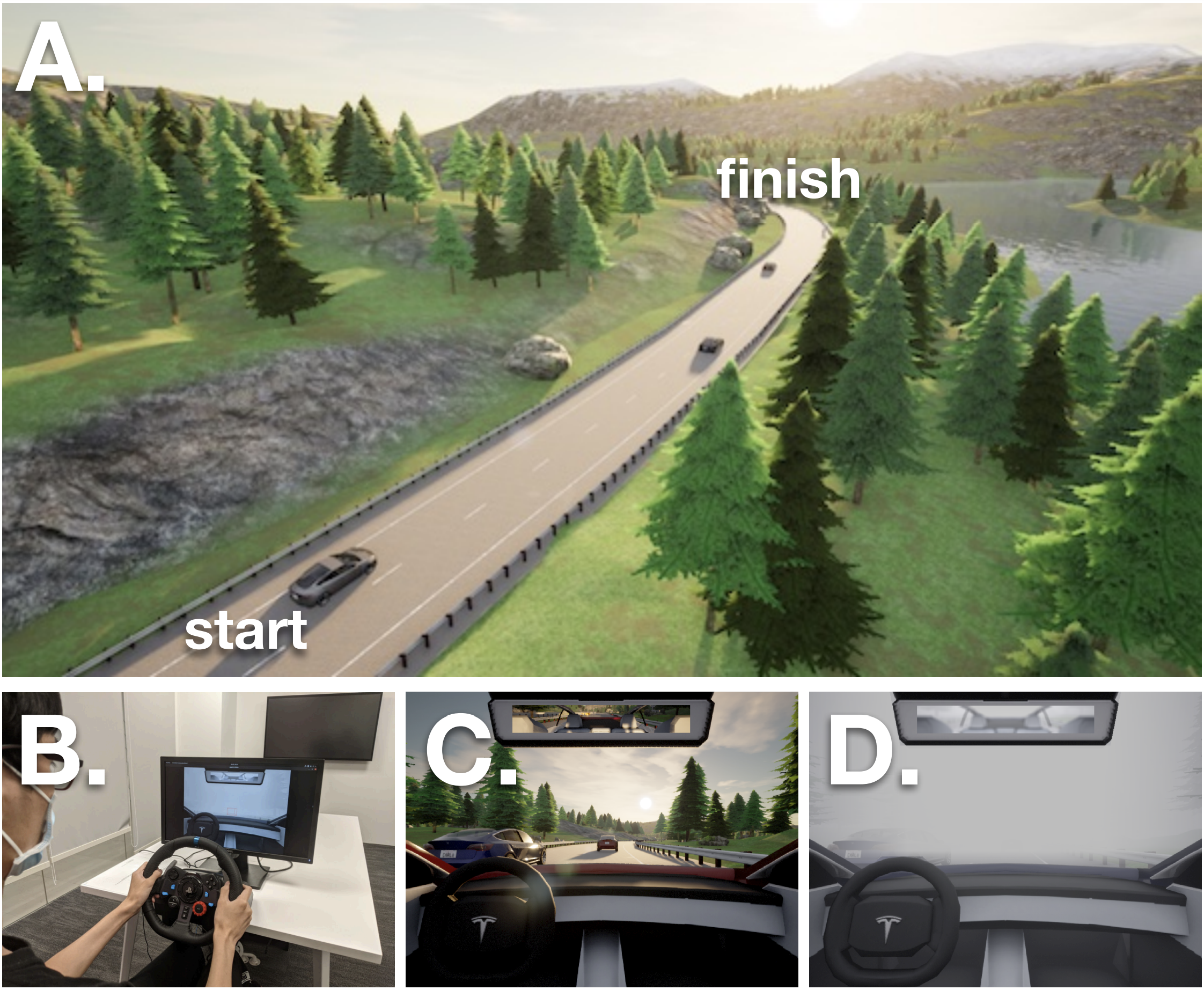

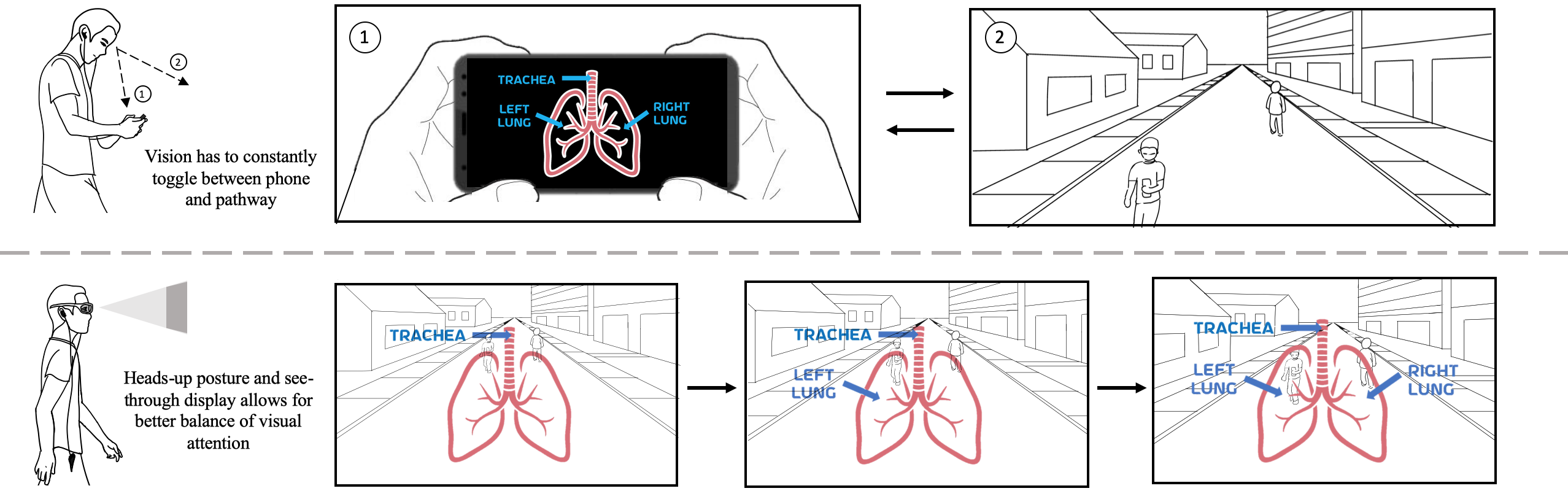

In the future, many people may take along compact flying cameras (cameras mounted on flying drones) and use their touchscreen mobile devices as viewfinders to take photos. This project explores the user interaction design and system implementation issues for a flying camera, which leverages the autonomous flying capability of a drone-mounted camera for a great photo-taking experience.

Heads-Up Computing

Our vision in Being is to fundamentally improve the way humans interact with technology through Heads-Up Computing – the next interaction paradigm built on a wearable platform consisting of head, hand, and body modules that distributes the input and output capabilities of the device to match the human input and output channels. It proposes a voice + gesture multimodal interaction approach. Once realized, Heads-Up Computing can transform the way we work and live – imagine being able to attend to and manipulate information seamlessly while engaging in a variety of indoor and outdoor daily activities such as cooking, exercising, hiking, and socializing with friends.

iTILES

iTILES is an assistive playware device designed to maintain and improve the functional and cognitive abilities of its users through game-based learning. It comprises a set of 16 physical tiles (8 floor tiles and 8 wall tiles), a tablet application, and a web-based analytics platform.

Users can interact with each tile by shaking or touching/stepping on it. Corresponding tiles respond to users by lighting up, vibrating, and/or producing sounds based on the customized game settings. Currently, there are three games, and any combination of floor and wall tiles can be used, offering various play possibilities.

To use iTILES, a game supervisor (e.g., therapist) can set up and customize games through a tablet device. Users, such as children with autism, participate by interacting with the tiles. After game sessions, the game supervisor can access analytics through an online dashboard.

Kavy

Kavy is a poetic voice companion crafted to enrich the language-learning journey. Unlike traditional voice assistants, Kavy engages users in poetic and human-like conversations, providing a unique approach to language acquisition. With adaptability at its core, Kavy caters to learners of all levels, fostering sustained dialogues and boosting confidence in verbal expression. Accessible through a user-friendly mobile app on various platforms, Kavy offers a practical and enjoyable way to enhance your language skills. Explore a personalized language experience with Kavy and improve linguistic proficiency.

LSVP: On-the-go Learning with Dynamic Information

The ubiquity of mobile phones allows video content to be watched on the go. However, users' current on-the-go video learning experience on phones is encumbered by issues of toggling and managing attention between the video and surroundings, as informed by our initial qualitative study. To alleviate this, we explore how combining the emergent smart glasses (Optical Head-Mounted Display or OHMD) platform with a redesigned video presentation style can better distribute users' attention between learning and walking tasks. We evaluated three presentation techniques (highlighting, sequentiality, and data persistence) and found that combining sequentiality and data persistence is highly effective, yielding a 56% higher immediate recall score compared to a static video presentation. We also compared the OHMD against smartphones to delineate the advantages of either platform for on-the-go video learning in the context of everyday mobility tasks. We found that OHMDs improved users' 7-day delayed recall scores by 17% while still allowing 5.6% faster walking speed, especially during complex mobility tasks. Based on the findings, we introduce the Layered Serial Visual Presentation (LSVP) style, which incorporates sequentiality, strict data persistence, and a transparent background, among other properties, for future OHMD-based on-the-go video learning. We are also creating a tool to help users create LSVP-style videos from existing Khan Academy-style videos.

Paracentral and Near-Peripheral Visualizations

Optical see-through Head-Mounted Displays (OST HMDs, OHMDs) are known to facilitate situational awareness while accessing secondary information. However, information displayed on OHMDs can cause attention shifts, which distract users from natural social interactions. We hypothesize that information displayed in paracentral and near-peripheral vision can be better perceived while the user is maintaining eye contact during face-to-face conversations. Leveraging this idea, we designed a circular progress bar to provide progress updates in paracentral and near-peripheral vision. We compared it with textual and linear progress bars under two conversation settings: a simulated one with a digital conversation partner and a realistic one with a real partner. Results show that a circular progress bar can effectively reduce notification distractions without losing eye contact and is preferred by users. Our findings highlight the potential of utilizing paracentral and near-peripheral vision for secondary information presentation on OHMDs.

Robi Butler

Imagine your home of the future: Robi Butler recognizes you, communicates with you verbally to understand your needs and preferences, and completes various household tasks. We are developing new robot capabilities to see, to touch, to speak, and to manipulate daily household objects.

SonicVista

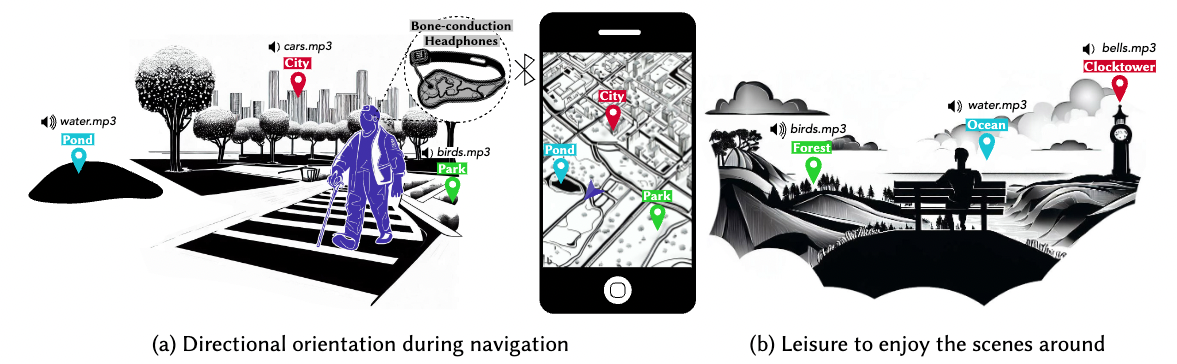

Spatial awareness, particularly awareness of distant environmental scenes known as the Vista space, is crucial and contributes to the cognitive and aesthetic needs of People with Visual Impairments (PVI). In this project, we investigate AI-based audio generative models to design sounds that can create awareness and experience of vista-space scenes.

SoniPhy

While regulation of cognitive and emotional states is important for the health and wellbeing of students and those in the workplace, tasks in school and office settings typically demand most of our attention.

SoniPhy investigates whether, and how, ambient biofeedback can be used to amplify our perception of internal bodily sensations and provide continuous emotion regulation support in an unobtrusive manner. The project is grounded in research showing that the ability to accurately perceive internal sensations such as "butterflies in the stomach" has been linked to better emotion regulation and use of adaptive coping strategies. As an ambient intervention, SoniPhy also places low demands on user attention, allowing users to rely on it while concurrently working on a primary task.

Stakco

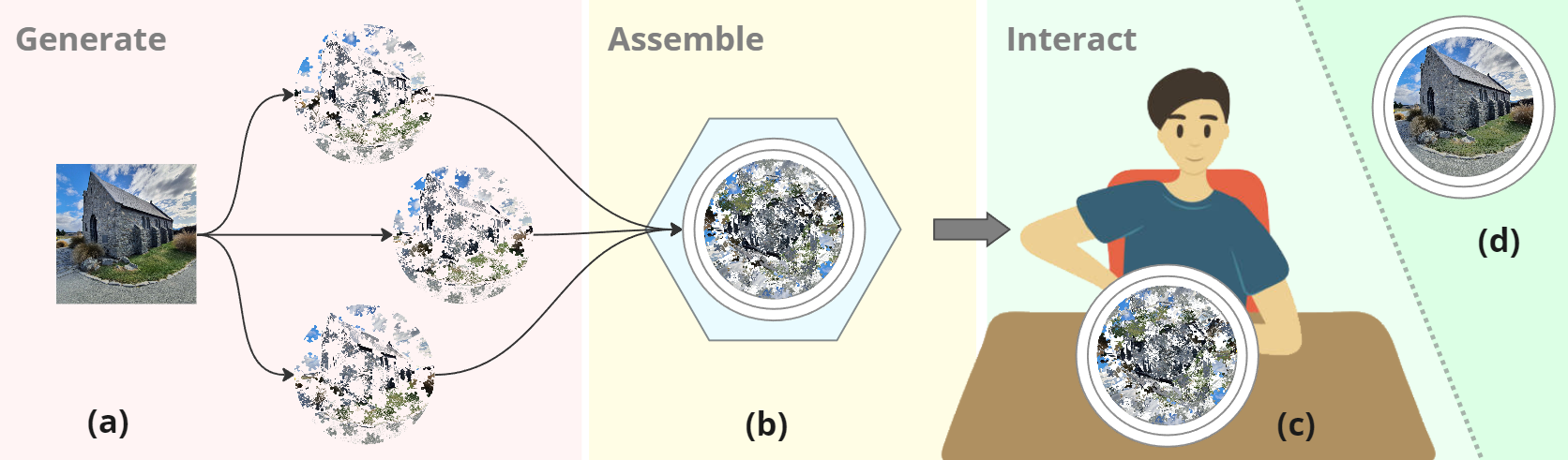

Stakco presents a patent-pending interactive puzzle experience that enables users to rotate layers of the puzzle to reveal the final image. Puzzles have long been acknowledged for their capacity to provide leisurely enjoyment, mental stimulation, and stress reduction. The well-documented cognitive benefits and the ability to foster social interactions make puzzles a versatile and valuable activity.

We produce both physical and digital puzzles and are currently exploring the applications of our proprietary software to broaden the audience engaging in puzzles. This includes potential utilization by therapists working with children with autism. The shapes and designs within each puzzle can be quickly modified to offer limitless variations. This not only increases replayability but also creates diverse and personalized experiences for individuals of all backgrounds.

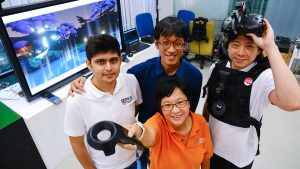

The Lost Foxfire

'The Lost Foxfire' (迷い狐火) is a multi-sensory Virtual Reality (VR) game in which players use their senses of heat and smell to find and stop a Foxfire from accidentally burning down their house. Heat and smell modules are mounted onto a vest and an HTC VIVE headset to create directional heat sensory cues and more immersive gameplay for the players. Traditional games usually rely on sight and sound cues to form a player’s gaming experience. Games that engage additional senses are few, and this space can be explored to offer improved immersion.

Toro

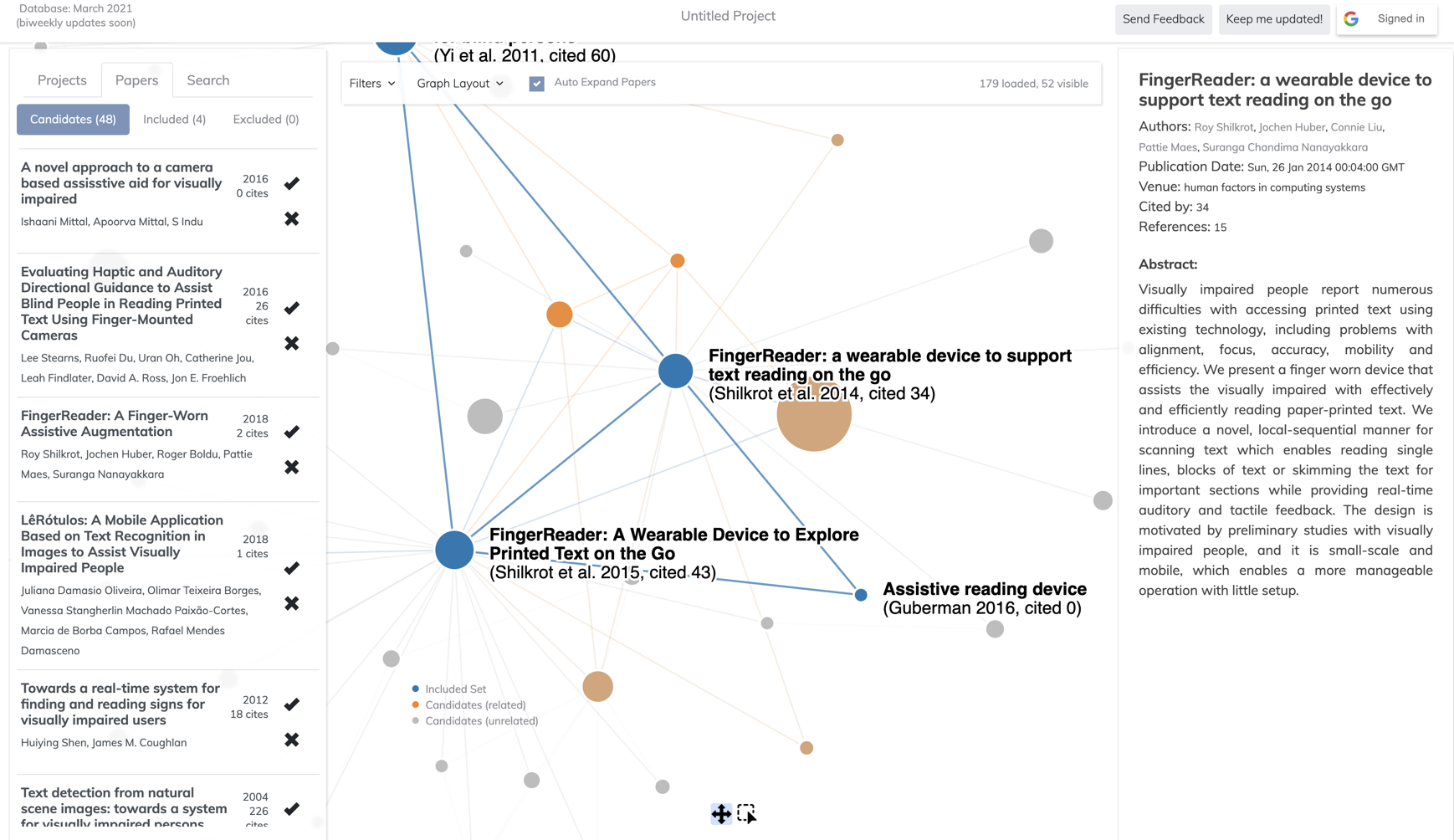

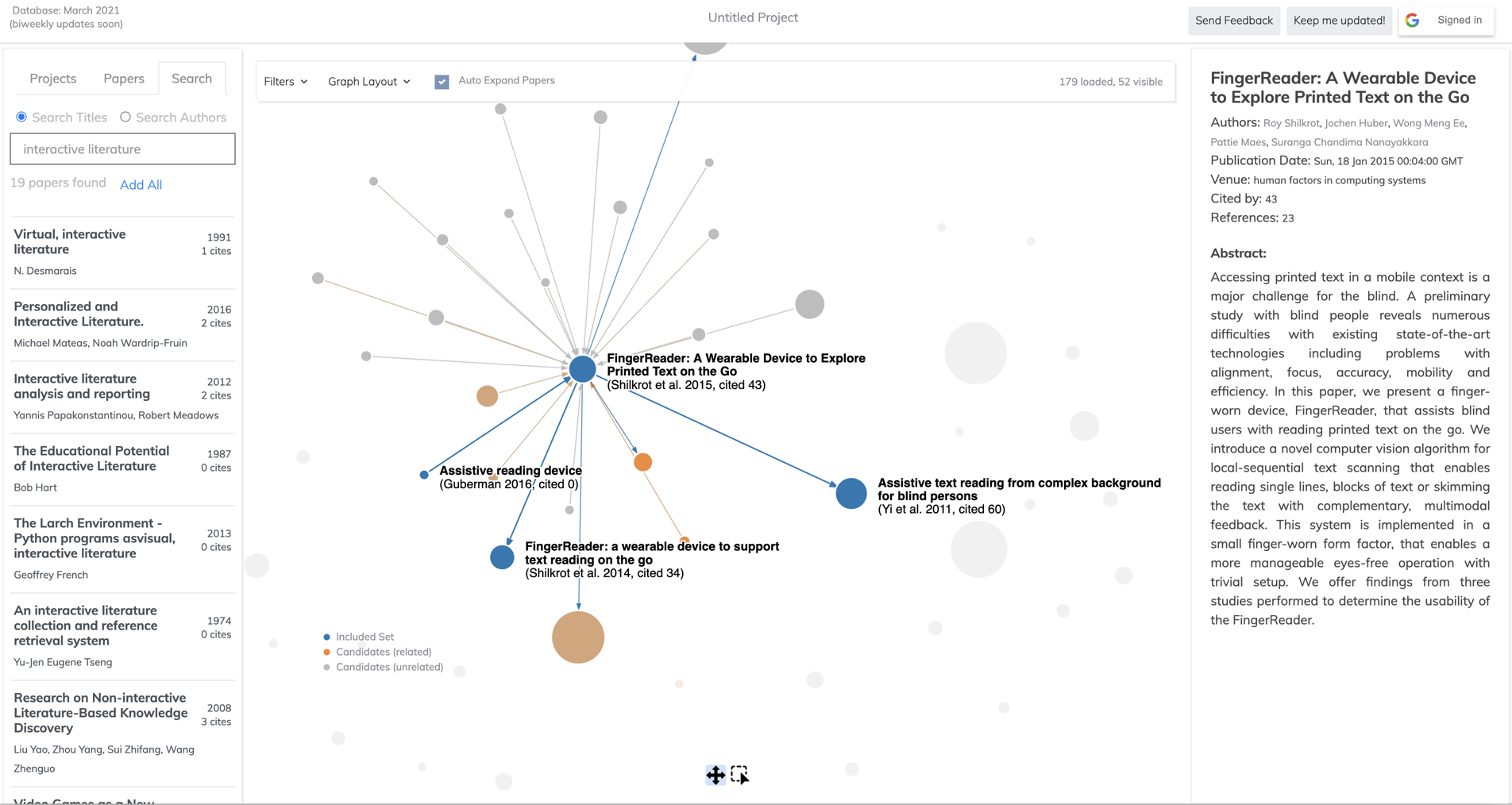

In this project we aim to develop a tool for the research community that simplifies the time-consuming literature research process by providing an interactive way of systematically exploring, analyzing, and reviewing literature. Our Interactive Literature Exploration tool, Toro, takes advantage of visualizing the relation between papers in a two-dimensional graph. Users can easily manipulate the graph, filter out undesired papers, or expand on related ones to explore relevant and interesting streams of literature.

Touch + Vision for Robots

Humans use their sense of touch for a variety of scenarios, e.g., handling delicate objects. We aim to develop robots that can use their sense of touch and vision to help humans with tasks.

Virtual Cocktail

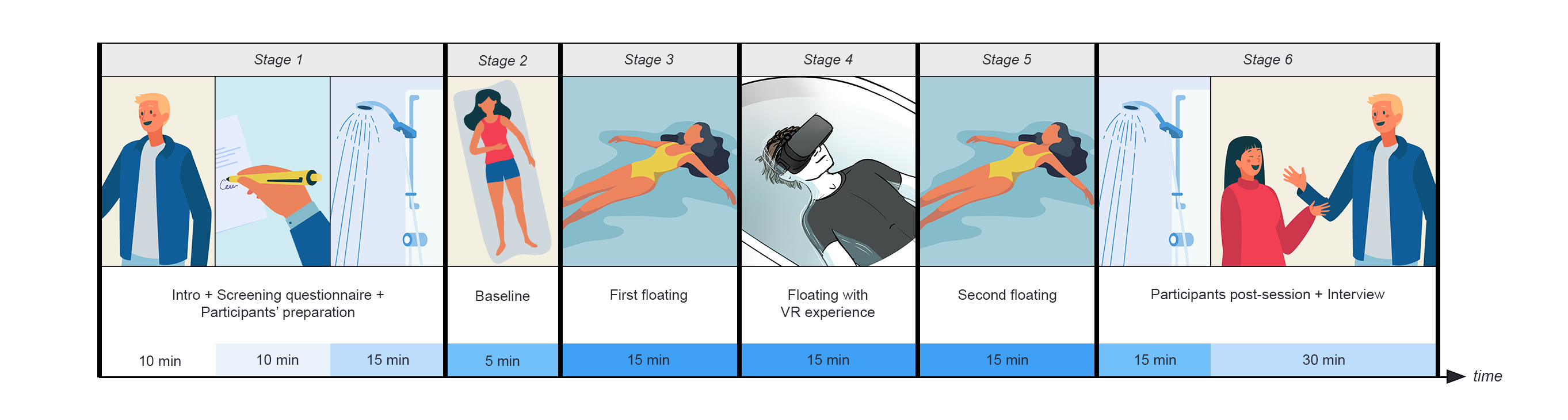

Virtual Cocktail is an interactive system capable of conveying taste, smell, and visual stimuli to augment flavor sensations. The system allows users to experience virtually flavored beverages on top of plain water or an existing drink. The experience of virtual flavors starts when users look at the color-overlaid drink and smell it, and continues when they place their tongues on the two silver electrodes to drink while natural food aroma is delivered to their nose.

XR Floatation Therapy

Could a playful extended reality floatation tank experience reduce fear of being in water? This project was conducted in collaboration with Maria Fernanda Montoya Vega (Mafe), a PhD student from Monash University (Exertion Games Lab).

Find out more at: https://exertiongameslab.org/fluito-interactive-wellness

Join Us

'The Other Me' project envisions a smart human-centric AI assistant embodied in wearable glasses, with integrated intelligence that collaborates with users on various tasks in daily life and work – such as assembling a bike from component parts.

We're always on the lookout for exceptional talent to join our team in developing reusable, modular technical components, and demo use-cases for the ToM Platform.

REQUIREMENTS

- Good knowledge and foundation in object-oriented programming

- Passion & interest for evolving fields in XR/AI/robotics, and hands-on coding skills

Depending on area of specialization:

- Experience in Unity3D/C# development, especially with AR/VR (as main development environment for the project)

- Hands-on knowledge of and familiarity with 2D/3D computer graphics and the rendering pipeline

- Server development / data processing: JavaScript/Node.js, Golang

- Client-server communication: e.g. WebSocket, RTP streaming, ...

- Tools and scripting languages: Python

- Open-source libraries: OpenCV

- Native app development in Android / iOS Swift (for plugins to native APIs such as ARKit/ARCore)

Curious and passionate about what we do? Feel free to drop us an email at idmjobs@nus.edu.sg to have a chat and find out more!